NAACL papers I'm excited for

28 Apr 2018I just found out my company is sending me to NAACL! Thank you, Networked Insights 😁. The list of accepted NAACL papers came out about a month ago and more and more papers are showing up on arXiv every day.

Here are 10 interesting-sounding papers and what I could find out about them.

A corpus of non-native written English annotated for metaphor

Beata Beigman Klebanov, Chee Wee (Ben) Leong and Michael Flor

Interesting to note that Beata Beigman Klemanov (what an iconic name by the way) works at ETS. It makes sense to me that the people who make those tests would be into this kind of annotation. It’s also cool that it’s non-native English because you don’t see a lot of datasets that are explicitly that and I don’t know what percentage of English speakers are non-native but I’m sure it’s a lot*.

* Holy #*@%! L2 English speakers outnumber L1 speakers by a ration of 3 to 1!

Dear Sir or Madam, May I introduce the YAFC corpus: Corpus, benchmarks and metrics for formality style transfer

Sudha Rao and Joel Tetreault

Joel Tetreault works at Grammerly which makes software that does what they call writing enhancement which surely includes spelling and grammar, but perhaps also some tooling around formality?.

Sudha Rao is an early PhD at UMD advised by Hal Daumé which makes me incredibly jealuous. She also interned at Grammarly last summer which is surely where this work comes from. Also, her thesis project includes grounding which is a favorite topic of mine.

ATTR2VEC: Jointly learning word and contextual attribute embeddings with factorization machines

Fabio Petroni, Vassilis Plachouras, Timothy Nugent and Jochen L. Leidner

I’m always interested in work that tries to capture contextual information, especially when it’s giving structure to word embeddings, which it sounds like this might. The authors have released their code on github but the readme is all business and I don’t know enough about the topic to tell from the code what is going on, so I’ll wait.

Deep contextualized word representations

Matthew Peters, Mark Neumann, Mohit Iyyer, Matt Gardner, Christopher Clark, Kenton Lee and Luke Zettlemoyer

I was a little skeptical of this work at first, I mean hasn’t this been done already? But I’m not sure any of those embeddings proved to be so useful for a broad variety of tasks, as this approach claims to. Also they’ve put both code up in both TensorFlow and PyTorch with tutorials and everything. Way to go AllenNLP 😊

Diachronic usage relatedness (DUREL): A framework for the annotation of lexical semantic change

Dominik Schlechtweg, Sabine Schulte im Walde and Stefanie Eckmann

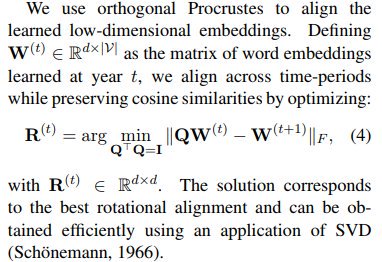

These folks take a different approach from the diachronic semantics papers I wrote about a few weeks ago. Instead of comparing embeddings, they compare usage directly. How do they do that?

- Take a bunch of usages of a word (with context) from one time period

- Pair them up with usages from another time period

- Make some measure of comparison on usages

- Look at the average of this mesaure across all pairs

- Profit???

The authors come up with two different measures to do this dance with. And they claim one of them can tell the difference between innovative and reductive semantic change.

Attentive interaction model: Modeling changes in view in argumentation

Yohan Jo, Shivani Poddar, Byungsoo Jeon, Qinlan Shen, Carolyn Rose and Graham Neubig

When I read this title I knew immediately that they used /r/changemyview. An earlier paper by some of the Cornell computational sociolinguistics folks comes with a really nice dataset for it (with annotations)! They are so good about releasing data that’s well documented, easy to use and not 404’d. (arXiv)

Author commitment and social power: Automatic belief tagging to infer the social context of interactions

Vinodkumar Prabhakaran and Owen Rambow

I took a look at the author’s thesis. One of the main ideas is that you can predict social power based on their level of belief in their utterances. “i.e., whether the participants are committed to the beliefs they express, non-committed to them, or express beliefs attributed to someone else”. Sounds pretty interesting and I think that’s what this paper is going to be about.

Deconfounded lexicon induction for interpretable social science

Reid Pryzant, Kelly Shen, Dan Jurafsky and Stefan Wagner

I’m very interested in this enigmatically titled paper. What is this lexicon and what does it mean for it to be deconfounded? In what way is it being induced? And we’re doing… what with it? Interpreting social science!?! Fascinating. Unfortunately, all I could find is this broken link.

There is also this Github project but but my mother told me to poke around in a code repository I know nothing about.

Colorless green recurrent networks dream hierarchically

Kristina Gulordava, Marco Baroni, Tal Linzen, Piotr Bojanowski and Edouard Grave

Dad joke level title, but atually pretty descriptive. The authors want to see if RNNs learn abstract hierarchical syntactic structure. In other words, do they understand the ways in which words build into phrases and phrases build into sentences, and how they’re connected once they do?

To test this, they test if the RNN language model can get syntactic number agreement right in meaningless (but syntactically correct) sentences like “The colorless greed ideas I ate with the chair sleep furiously”. In this example, if the RNN predicts sleep, which agrees with ideas rather than sleeps, then that’s evidence the RNN understands the syntactic structure.

Deep dungeons and dragons: Learning character-action interactions from role-playing game transcripts

Annie Louis and Charles Sutton

Well this is clearly awesome. I’m not sure exactly what it means for a character to interact with an action, but I’m excited to find out. Couldn’t find so much as an abstract online, and the authors’ past work doesn’t have any big hints so we’ll have to wait to find out.

That’s all for now! But there are many many more papers that sound interesting. I’ll follow up in June with another blog post about what stood out at NAACL 😊